Why not use Accept-Reject?

- In Accept-Reject, we:

- Choose some function $g(x)$ that is close to the distribution we want to sample $p(x)$

- Sample from $g(x)$ and accept with probability $\frac{f(x)}{Mg(x)}$

- Intuitively, the ratio $\frac{f(x)}{g(x)}$ tells us that we should prefer samples with high densities (big $f(x)$, likely to come from $p(x)$) or are rare candidates (low $g(x)$)

- For high dimensional data, it is hard to efficiently sample from $Mg(x) > f(x)$ because $M$ tends to be very large.

- Large M results in low acceptance probabilties (because $\frac{1}{M} \propto \text{acceptance}$)

- This means we need to sample a lot to get accepted samples.

display(Math(r'''

\begin{align}

\\NC = \int_{-\infty}^{\infty}f(x)dx

\\

\\\text{Bayes Theorem: } P(S|A) &= \frac{P(A|S)D(S)}{P(A)}

\\&=\frac{\frac{f(x)}{Mg(x)} \times g(x)}{P(A)}

\\

\\\text{rearrange: } P(A) &= \int_{-\infty}^{\infty}g(x)\frac{f(x)}{Mg(x)}dx

\\&=\frac{1}{M}\int_{-\infty}^{\infty}f(x)dx

\\&=\frac{NC}{M}

\\\text{rearrange: } P(S|A) &= \frac{f(x)/M}{NC/M}

\\&=\frac{f(x)}{NC}

\\&=p(x)

\end{align}

'''))

Solution

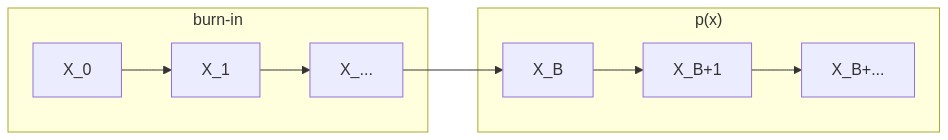

- Build a markov chain that has a stationary distribution that represents $p(x)$, then sample from it.

- Each draw from the distribution is dependent on the previous draw (Markov Chain)

- Simulate draws from the markov chain (Monte Carlo)

- Requires drawing from the markov chain for some "burn-in" period before converging to $p(x)$.

- To guarantee that the markov chain converges to $p(x)$, you need to satisfy the detailed balance condition:

$y \rarr x$. $\forall{x,y} p(x)T(y|x) = p(y)T(x|y)$ -- The probability of going $x \rarr y$ is the same as the probability of going

$\sum{p(x)T(y|x)} = \sum{p(y)T(x|y)}$ -- Therefore the sums of those probabilities is the same

$pT = p$ -- Therefore we reach steady state

Metropolis-Hastings

The transition probability is equal to your likelihood of going from A to B times your likelihood of going from B to A. $$ \\ \forall{a,b} \\ p(a)T(a\rarr b) = p(b)T(b\rarr a) \\ \frac{f(a)}{NC}g(b|a)A(a\rarr b) = \frac{f(b)}{NC}g(a|b)A(b\rarr a) \\ \frac{A(a\rarr b)}{A(b\rarr a)} = \frac{f(b)}{f(a)} \times \frac{g(a|b)}{g(b|a)} $$

Here, we note that $r_f = \frac{f(b)}{f(a)}$ and $r_g = \frac{g(a|b)}{g(b|a)}$

For $r_fr_g <1$, $A(a\rarr b) = r_fr_g$, and $A(b\rarr a) = 1$ For $r_fr_g \ge1$, $A(a\rarr b) = 1$, and $A(b\rarr a) = \frac{1}{r_fr_g}$

Therefore, $A(a\rarr b) = \min{(1, r_fr_g)}$

At each step, we will sample some function $g(x_{t+1}|x_t)$. This function can be any distribution, e.g. $\mathcal{N}(x_t, \sigma^2)$